Can you defend against an attack that rewrites itself faster than your security team can respond?

Cardonet has been looking after clients’ IT for more than 25 years. In that time the security threats have changed constantly, but this feels different.

AI has moved from being a “nice to have” innovation to the engine behind a growing share of cybercrime. It is also now the main way defenders keep up.

Recent analyses suggest roughly one in six cyber incidents now involve AI on the attacker’s side, especially in phishing, fraud and social engineering. UK fraud and cyber loss data shows AI‑assisted scams are costing businesses tens of millions of pounds through deepfake‑driven investment fraud and sophisticated social engineering.

If you own or run a business, this isn’t abstract. It’s about whether you can keep trading when a criminal uses your own data, your brand, even your voice against you, and whether your insurance will still pay out when that happens.

How criminals are using AI today

Let’s start with how attackers are using AI right now, because this is what you’re up against.

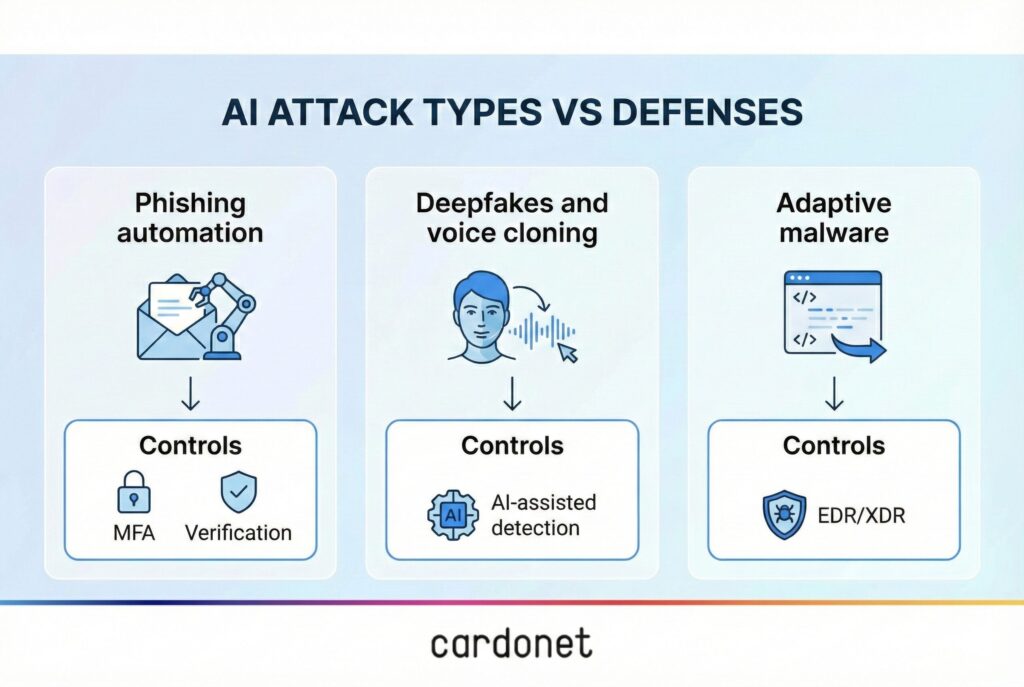

1. Smarter, faster phishing

A few years ago, most phishing emails were full of spelling mistakes and odd phrasing. Today that’s the exception. Attackers feed public information about your business, your sector and your people into large language models and generate thousands of emails that read like a real supplier, a real colleague, a real customer.

- Messages are tailored to specific roles and current projects.

- Tone of voice can be tuned to match the organisation they’re impersonating.

- Replies are handled automatically, so the conversation feels natural.

You don’t need a big criminal gang to do this anymore. One person with the right tools can run a campaign that looks and feels like it’s coming from a well‑resourced fraud operation.

2. Deepfakes and voice cloning

The same thing is happening with audio and video. Recent UK data puts losses to investment scams in the first half of 2025 at close to £100 million, with AI deepfakes playing a significant part in making those scams so convincing. Global analysis shows deepfake volumes and associated fraud attempts rising sharply over the last two years.

Patterns seen in real incidents include:

- A “CEO” or “FD” on a video or Teams call approving an urgent payment.

- A “supplier” on a video call asking to change bank details.

- Voice messages that sound exactly like your senior people, asking for exceptions to normal controls.

Under pressure, people act. If your approval process assumes “I saw them / I heard them” equals “it’s safe”, you are now exposed.

3. Malware that learns as it goes

The third big shift is on the malware side. There are now malware families that use AI to change how they behave mid‑attack. Threat intelligence teams have documented samples that call out to AI services for new ways to obfuscate code, avoid detection or move sideways once they are inside a network.

In simple terms:

- Traditional antivirus looks for known bad patterns.

- AI‑driven malware keeps changing those patterns.

- Once inside, it can adjust what it does in response to what your tools are blocking.

That’s why “we’ve got antivirus and a firewall” is no longer a serious answer. It’s like turning up to a Formula 1 race with a 1990s family saloon and hoping for the best.
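To make the signature problem concrete, here is a deliberately simplified Python sketch. It assumes a detection tool that only compares file hashes against a list of known‑bad hashes; real products layer much more on top of this, and the names `KNOWN_BAD_HASHES` and `matches_signature` are illustrative, not taken from any product. The “payload” is a harmless stand‑in string.

```python
import hashlib

# Hypothetical signature list: SHA-256 hashes of previously seen bad files.
# The "sample" here is just a harmless placeholder string.
KNOWN_BAD_HASHES = {
    hashlib.sha256(b"harmless-stand-in-for-a-known-sample").hexdigest(),
}


def matches_signature(payload: bytes) -> bool:
    """Return True if the payload's hash appears in the known-bad list."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


original = b"harmless-stand-in-for-a-known-sample"
mutated = original + b" "  # a single extra byte changes the hash completely

print(matches_signature(original))  # True: exact match with a known pattern
print(matches_signature(mutated))   # False: same content in spirit, new pattern
```

A one‑byte change is enough to defeat an exact‑match check, which is why detection that watches behaviour, not just known patterns, matters.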

When AI is inside your own tools

There’s a second layer to this: attackers are starting to go after the AI models inside your own tools.

Adversarial machine learning is a set of techniques where attackers deliberately craft inputs that cause AI systems to make the wrong decision – classifying something malicious as safe, or flagging something harmless as a threat. That can mean:

- Emails tweaked just enough to slip past AI spam filters.

- Network behaviours shaped to look “normal” to an anomaly detector.

- Poisoned data slipped into training sets so future models are blind to certain patterns.

Most mid‑market businesses don’t have data science teams to reverse‑engineer why a model made a bad call. They only see the outcome: “we had the tech, and it still got through.”

That doesn’t mean AI in security is a mistake. It means you have to treat your own AI stack as something that needs securing in its own right, not just a magic box that “does security”.

Your insurance costs are already feeling this

Now to the part that directly hits your P&L: cyber insurance.

The UK numbers are stark. Trade body data shows cyber insurance payouts for UK businesses reached around £197 million in 2024 – roughly three times the level of the previous year – with malware and ransomware accounting for just over half of all claims. Put simply, more attacks, more damage, bigger cheques.

Premiums and deductibles

In some quarters you may see average cyber premiums dip slightly where competition heats up, but the long‑term direction is obvious: up. As expected losses increase, insurers price that in. Expect:

- Higher base premiums at renewal over the medium term.

- Larger deductibles before the policy responds.

- Tighter sub‑limits for ransomware, funds transfer fraud and business interruption.

Tighter minimum security requirements

Underwriters now routinely insist on a baseline of controls before they will quote.

Common requirements include properly deployed endpoint detection and response, multi‑factor authentication on email and critical systems, and privileged access management for admin accounts. Questions around fraud controls, call‑back procedures and how you verify high‑risk changes are also becoming normal, particularly given the rise of deepfake‑enabled scams.

If you can’t show that you’ve done the basics, you may find:

- You can’t get cover at all at the limit you want.

- You get cover, but claims are challenged if something goes wrong.

- You are pushed into a more expensive, “high‑risk” bracket.

Exclusions and grey areas around AI

There’s also a quieter shift happening in policy wording.

Some policies now include language around “AI‑driven” or “synthetic” attacks, and some legal commentators have flagged the risk of exclusions or narrow interpretations in those areas. Another subtle problem is where a policy defines a “hacker” as an individual person, when in reality the actor is a semi‑autonomous tool being steered by a person in the background.

In a major loss, that wording will get tested line by line. You don’t want to be having that argument for the first time after the event.

So the real question is this:

Given how prevalent and expensive AI‑driven cyber fraud has become – and with insurance payouts and scrutiny rising – how will you cope when your premiums go up and your cover gets tighter?

What good defensive AI looks like in practice

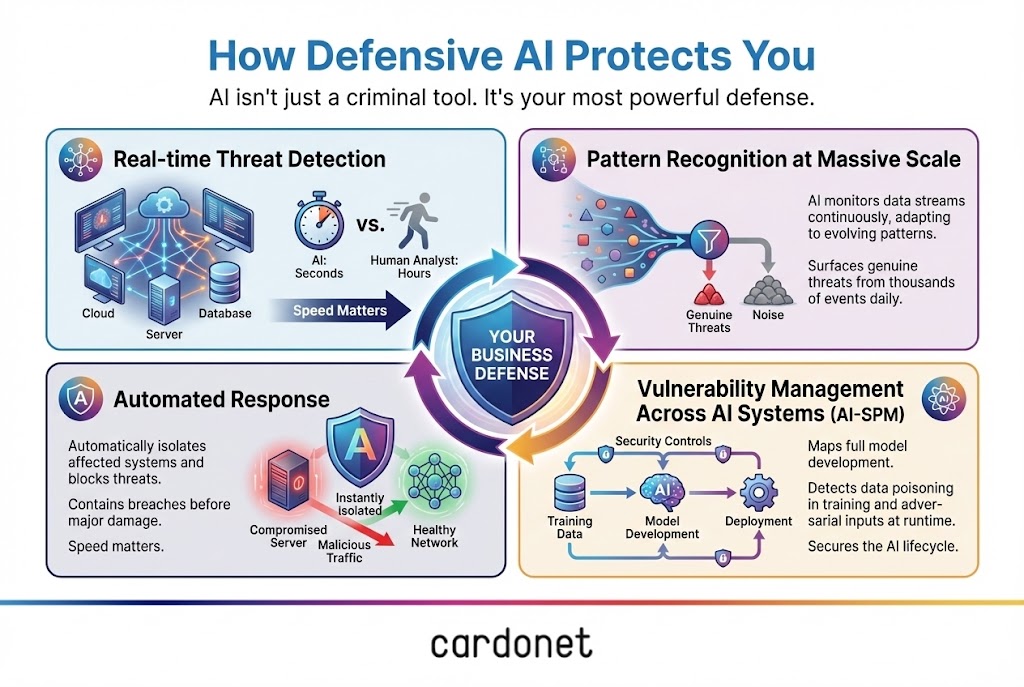

The good news is you can use AI defensively to close a lot of these gaps. But it has to be done in a structured way. In our work with clients, a realistic AI‑driven security stack usually has four layers.

1. Strong identity and access as the foundation

Before you even talk about AI, get identity right:

- Multi‑factor authentication on email, VPN and all remote access.

- Role‑based access so people only see what they need.

- Extra checks for finance and admin accounts, including out‑of‑band verification.

This is what insurers expect now, and it’s the cheapest way to blunt a lot of AI‑powered phishing and deepfake attempts, because even if someone is fooled, the attacker still struggles to log in.

2. AI‑assisted detection and response

The second layer is where AI really starts earning its keep:

- Analysing endpoint, identity, email and network telemetry in close to real time.

- Spotting unusual patterns – unfamiliar logins, odd data movement, strange process activity.

- Correlating events across systems so your team sees the whole story, not isolated alerts.

Used well, AI here gives your team a realistic chance of spotting and containing an attack before it becomes a full‑blown incident. Think of it as giving your analysts a power tool, not replacing them.
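As a rough illustration of what “correlating events across systems” means, the Python sketch below groups alerts by user and flags cases where several different systems report something within a short window. It is a minimal sketch under stated assumptions: the `Event` fields, the source labels, the 30‑minute window and the `correlate` function are all hypothetical, and real detection platforms use far richer logic.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    source: str   # illustrative labels: "identity", "email", "endpoint"
    user: str
    kind: str
    time: datetime


def correlate(events, window=timedelta(minutes=30), min_sources=2):
    """Return (user, events) pairs where at least `min_sources` different
    systems reported activity for the same user within `window`."""
    by_user = defaultdict(list)
    for event in events:
        by_user[event.user].append(event)

    incidents = []
    for user, user_events in by_user.items():
        user_events.sort(key=lambda e: e.time)
        start = 0
        for end in range(len(user_events)):
            # Shrink the window from the left until it spans <= `window`.
            while user_events[end].time - user_events[start].time > window:
                start += 1
            group = user_events[start:end + 1]
            if len({e.source for e in group}) >= min_sources:
                incidents.append((user, group))
                break  # one correlated incident per user is enough here
    return incidents


events = [
    Event("identity", "alice", "login from unfamiliar country", datetime(2025, 1, 6, 9, 0)),
    Event("email", "alice", "new auto-forwarding rule", datetime(2025, 1, 6, 9, 10)),
    Event("endpoint", "alice", "unusual archive of finance files", datetime(2025, 1, 6, 9, 20)),
    Event("identity", "bob", "login from unfamiliar country", datetime(2025, 1, 6, 9, 0)),
]

incidents = correlate(events, min_sources=3)
for user, group in incidents:
    print(user, [e.kind for e in group])
```

In this example only alice is flagged, because three separate systems reported something about her account within 20 minutes. Bob’s single alert stays a single alert.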

3. AI Security Posture Management (AI‑SPM)

If you’re using AI or machine learning internally – for customer scoring, operations, or internal automation – you now have to think about the security posture of those systems:

- Where do these models live – on‑prem, in specific cloud services, or embedded in vendor products?

- Who can change their configuration or training data?

- How do you monitor them for misuse, drift or unexpected behaviour?

Specialist frameworks and tools have emerged to sit across this lifecycle and flag misconfigurations, exposed interfaces and unusual activity. Insurers and regulators are starting to ask about this, particularly in sectors where AI models touch sensitive data.

4. People, process and testing

None of this works without people and process:

- Regular briefings on AI‑enhanced phishing, deepfake fraud and new scam patterns.

- Simple, enforced rules for verifying high‑risk requests (bank changes, large transfers, credential resets).

- Periodic testing: simulated phishing, table‑top exercises and, where appropriate, red‑team scenarios that include AI‑based attack methods.

If your people know that “I saw them on a call” is not enough to bypass controls, you drastically reduce the odds of a single deepfake ruining your month.
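A verification rule like this can be written down precisely enough to enforce in a workflow tool. The Python sketch below is one hedged way to express it: the action names, the channel labels and the `may_proceed` function are assumptions made for illustration, not a description of any particular product or of a specific client process.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of actions that always need out-of-band confirmation.
HIGH_RISK_ACTIONS = {"bank_detail_change", "large_transfer", "credential_reset"}


@dataclass
class Request:
    action: str
    requested_via: str                  # channel the request arrived on
    verified_via: Optional[str] = None  # separate channel used to confirm it


def may_proceed(request: Request) -> bool:
    """Allow a high-risk action only if it was confirmed on a different
    channel from the one the request arrived on."""
    if request.action not in HIGH_RISK_ACTIONS:
        return True
    return (
        request.verified_via is not None
        and request.verified_via != request.requested_via
    )


# A convincing video call alone is not enough.
print(may_proceed(Request("large_transfer", "video_call")))  # False

# The same request, confirmed by calling a number already held on file.
print(may_proceed(Request("large_transfer", "video_call", "known_phone_number")))  # True
```

The point of the design is that the rule never asks how convincing the original call or email was. It only asks whether a second, independent channel confirmed it.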

Where the UK government and regulators are heading

It’s not just insurers and criminals paying attention.

The UK government’s own assessment of generative AI is clear: across the current period to 2025, AI is expected to amplify existing cyber risks rather than create entirely new categories, but it will significantly increase the speed and scale of some attacks. That’s already visible in the volume of phishing, the pace of fraud campaigns, and the way exploits are packaged and industrialised.

In response, the proposed Cyber Security and Resilience Bill is designed to tighten expectations on operators of essential services and important digital providers, and to put more onus on boards to understand and manage cyber risk, including AI‑related risk. At the same time, the NCSC’s latest reviews talk about record numbers of nationally significant incidents with ransomware and state‑linked activity heavily involved.

For a mid‑market business, the message is straightforward: the environment you operate in is being treated as structurally higher‑risk. Your customers, insurers, regulators and suppliers will all gradually increase what they expect from you.

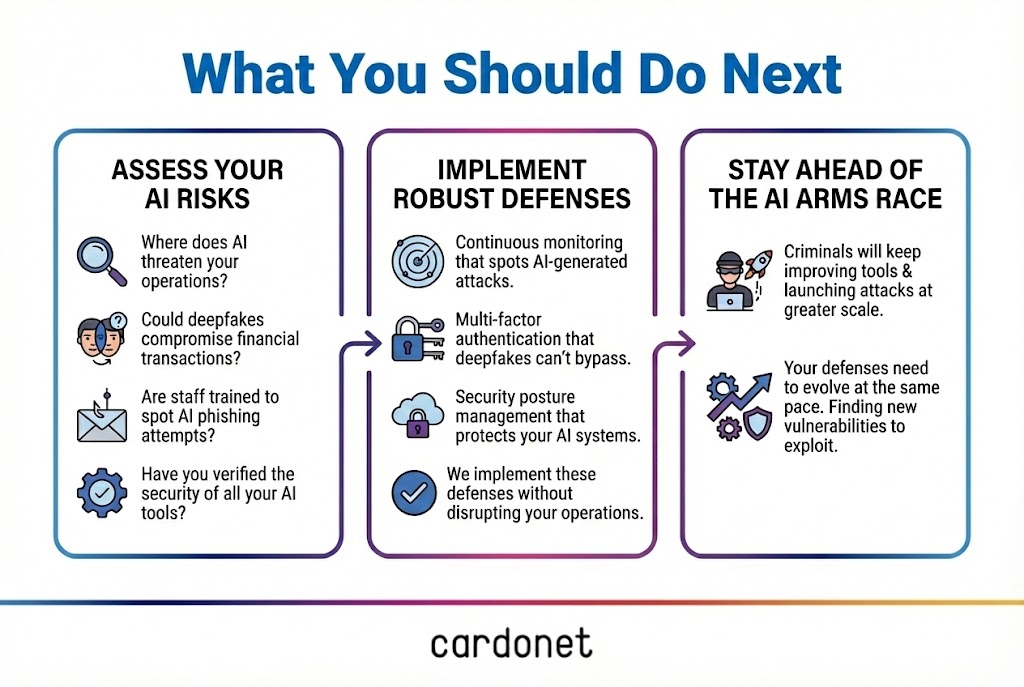

What this means for you, practically

So what should you actually do?

If we were sitting down together, these are the questions I’d be asking.

1. Where would an AI‑enhanced attack hurt you most?

- Payments and finance?

- Customer data and trust?

- Operational technology and uptime?

You don’t have to fix everything at once. You do need a clear view of your critical points of failure so investment goes where it matters most.

2. What are you already doing that just needs tightening?

Most organisations already have some building blocks: MFA on email, a modern endpoint solution, some level of awareness training. The quickest wins usually come from:

- Turning “optional” security features on and enforcing them.

- Closing the gap between policy and what really happens under pressure.

- Improving monitoring so you see issues earlier and in context.

3. How can AI support your existing team, not replace it?

If you’re like most of our clients, the question isn’t “how do we add more tools?” but “how do we give this team better signal and less noise?” That’s where AI‑backed detection, correlation and sensible automation add real value.

4. How can you minimise insurance costs and maximise cover down the line?

Before renewal, it’s worth sitting down with your broker and asking three direct questions:

- Which controls will underwriters expect to see this year?

- Where are the likely exclusions and grey areas around AI and fraud?

- What evidence will we need to present if we make a claim?

Then work backwards. That conversation should drive a lot of your 2025–26 cyber and AI security roadmap.

How Cardonet can help

This is where our team comes in.

We work with businesses across sectors – professional services, hospitality, creative industries, education and more – who are all asking the same thing: “How do we keep people productive and the business moving, without leaving the door wide open to AI‑driven attacks?”

In practical terms, that usually means:

- Assessing your current security posture with AI in mind: where you’re strong, where you’re exposed, and what your insurers and regulators will see.

- Designing a realistic roadmap: which controls to implement in which order, aligned to your budget, risk and sector.

- Implementing the right mix of identity, endpoint, network, cloud and AI‑focused controls – making sure they genuinely work together.

- Training your people so they know how to respond to AI‑enabled threats, not just old‑fashioned phishing.

- Helping you evidence all of this to boards, auditors, insurers and customers.

You don’t need a Hollywood‑style “AI cyber lab”. You need a clear view of your risks, the right foundations in place, and a partner who will tell you plainly where you stand and what to do about it.

If you’d like that level of clarity, talk to us. We’ll walk you through where AI changes your threat profile, what it means for your insurance, and how to get from where you are now to a position where you can say: “We’re not bulletproof, but we’re not a soft target either.”

FAQs

How worried should I really be about AI‑driven attacks if I’m an SME, not a big bank?

You should be concerned, but not paralysed. The data shows AI isn’t just being used against big enterprises – smaller firms are being hit with AI‑assisted phishing, fraud and account takeover because criminals know mid‑market controls are often weaker. The good news is that a handful of well‑chosen controls – strong identity, basic AI‑assisted detection, and tighter processes around payments – removes a lot of easy opportunities and makes you a harder target than the business down the road.

What are the first practical controls I should put in place for AI‑enhanced phishing and deepfakes?

Start with three basics: multi‑factor authentication on email and remote access, clear verification rules for money movements and bank detail changes, and regular awareness sessions that specifically cover deepfakes, voice cloning and realistic AI‑generated emails. Then add monitoring that can spot unusual logins and data movement, so you’re not relying on someone “having a bad feeling” as your main line of defence.

How is AI changing what insurers expect from me at renewal – and what happens if I fall short?

Insurers are seeing claims rise sharply and are reacting by asking harder questions about your controls, including how you manage phishing, ransomware and fraud risks that are now AI‑enabled. If you can’t show basics like MFA, endpoint protection, privileged access controls and sensible fraud procedures, you’ll either pay more, get lower limits, or find claims scrutinised much more aggressively when something goes wrong.

Can AI‑powered security tools actually help a small or mid‑sized team, or will they just create more noise?

Used badly, they absolutely can create more noise. Used properly, they reduce noise by correlating events across email, identity, endpoints and cloud services so your team sees a smaller number of better‑quality alerts. The aim is not to replace your people but to give them better signal so they can focus on real incidents instead of chasing hundreds of low‑value warnings.

If I suspect an AI‑driven attack – a deepfake call, odd payment request or strange system behaviour – what should my team do in the first hour?

First, don’t panic and don’t act alone. Pause any requested payments or changes, verify the request using a separate, trusted channel, and capture evidence (emails, call logs, screenshots) before anything is deleted. In parallel, isolate any affected accounts or devices, reset credentials where necessary, and escalate to your IT/security partner so they can review logs and contain any intrusion before it spreads.
